Configuration Management Camp is the event for technologists interested in Open Source Infrastructure automation and related topics. This includes but is not limited to Open Source Configuration Management, Provisioning, Orchestration, Choreography, Container Operations, and many more topics.

I got 99 problems and a bash DSL ain’t one of them.

John Willis | @Botchagalupe | Red Hat

John Willis has worked in the IT management industry for more than 35 years. He shares his experience throughout this presentation. Very opinionated and sometimes disturbing, but also very recognizable.

Drunken History of Configuration Management

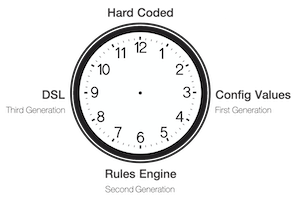

The Drunken History of Configuration Management. Mike Hadlow’s Configuration Complexity Clock again.

- Scripts at start

- Config Values (First Generation)

- Rules Engine (Second Generation)

- DSL (Third Generation)

YAML

The article “In defense of YAML” takes a very critical look at YAML and how it should (and should not) be used.

YAML as data format is defensible. YAML as a programming language is not. If you’re programming, use a programming language. You owe it to Turing, Hopper, Dijkstra and the countless other computer scientists and practitioners who’ve built our discipline. And you owe it to yourself.

Infrastructure as Code

Infrastructure as Code (Pro’s)

- Abstraction DSL’s are very powerful

- Self documenting

- High reusability code/modules

- Easier to provide data-driven models

- Generally more consistent than scripted patterns

- Most major IaC products have good testing abstractions

Infrastructure as Code (Con’s)

- Abstraction DSL’s have a higher learning curve

- Complex edge case scenarios/failures

- Script/Shell primitives are used often

- Integration interfaces are more complex

- Infrastructure is built Just-in-Time (JiT)

- Just In Time infrastructure brings the risk of having different configuration in dev, test and prod.

- Knowns are not always Known

- Builds are convergent, not congruent

Not sure why it’s shown at this point just before he praises Docker …

Horses vs unicorns. The unicorns used LXC, the horses need Docker 😉

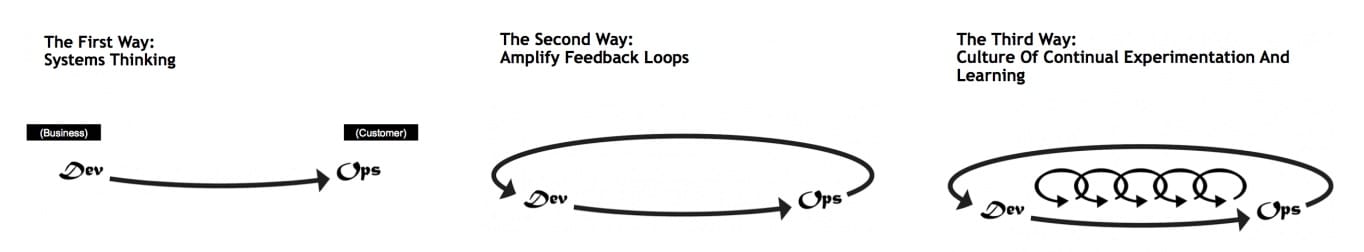

The Three Ways

Nice read from July 2015

- Docker and the Three Ways of DevOps Part 1: The First Way – Systems Thinking, how Velocity, Variation and Visualization can provide global optimization.

- Docker and the Three Ways of DevOps Part 2: The Second Way – Amplify Feedback Loops, how visualizing embedded metadata can speed up the time required to correct the defect and reduce the overall Lead Time of the service being delivered.

- Docker and the Three Ways of DevOps Part 3: The Third Way – Culture of Continuous Experimentation and Learning, how “Continuous Learning” and continuous improvement completes the full cycle.

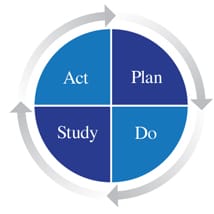

The Third Way: Plan - Do - Study - Act

A small piece from the third article:

Organizations that follow the principle of the Third Way employ a form of experiential learning. W. Edwards Deming, a famous American management thought leader, calls this the Plan Do Study Act Cycle (PDSA). PDSA is rooted in principles of scientific method in that everything you do is a small experiment.

John refers to Kris Buytaert’s blogpost “Everything is a freaking DNS problem”. Basically a post from an ops-perspective on the DevOps hype and the “Myth of the Full Stack Engineer”. Kris introduces the term “Dull Stack Engineer” because:

“Boring is Powerful, Boring is stable”, John Topper @ DevOpsDays London

I can totally agree with that. To me it also points out why DevOps should be complemented by SRE to have a stable development environment.

Order Matters

Why Order Matters: Turing Equivalence in Automated Systems Administration, Steve Traugott & Lance Brown

“Order Matters” when we care about both quality and cost while maintaining an enterprise infrastructure. If the ideas described in this paper are correct, then we can make the following prediction:

“The least-cost way to ensure that the behavior of any two hosts will remain completely identical is always to implement the same changes in the same order on both hosts”
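The prediction can be illustrated with a toy model. This is a minimal sketch and is not taken from the paper: a host is modelled as a dictionary of files, and the two change functions (`install_package`, `tune_config`) are hypothetical examples that happen to touch the same file.

```python
from functools import reduce

def install_package(host):
    # Hypothetical change: installing the package writes a default config file,
    # overwriting whatever was there before.
    return {**host, "app.conf": "default"}

def tune_config(host):
    # Hypothetical change: site-specific tuning rewrites the same config file.
    return {**host, "app.conf": "tuned"}

def apply_changes(host, changes):
    """Apply each change in sequence and return the resulting host state."""
    return reduce(lambda state, change: change(state), changes, host)

baseline = {}
host_a = apply_changes(baseline, [install_package, tune_config])
host_b = apply_changes(baseline, [install_package, tune_config])
host_c = apply_changes(baseline, [tune_config, install_package])

print(host_a == host_b)  # same changes, same order
print(host_a == host_c)  # same changes, different order
```

Hosts A and B end up identical, while host C receives the same two changes and still ends up with a different config file, because the package install overwrote the tuning.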

Management Methods

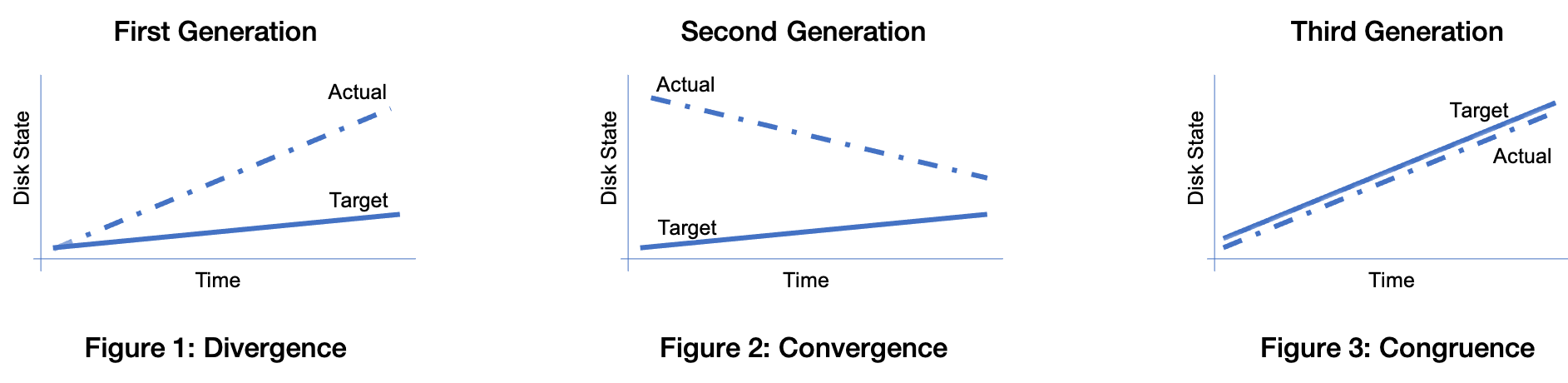

All computer systems management methods can be classified into one of three categories: divergent, convergent, and congruent.

- Divergence (Figure 1) generally implies bad management. Experience shows us that virtually all enterprise infrastructures are still divergent today. Divergence is characterized by the configuration of live hosts drifting away from any desired or assumed baseline disk content.

- Convergence (Figure 2) is the process most senior systems administrators first begin when presented with a divergent infrastructure. They tend to start by manually synchronizing some critical files across the diverged machines, then they figure out a way to do that automatically. Convergence is characterized by the configuration of live hosts moving towards an ideal baseline.

- Congruence (Figure 3) is the practice of maintaining production hosts in complete compliance with a fully descriptive baseline. Congruence is defined in terms of disk state rather than behavior, because disk state can be fully described, while behavior cannot.
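The difference between convergence and congruence can be sketched with disk state modelled as a dictionary. This is only an illustration under simplifying assumptions: the file names, the `BASELINE` contents and both functions are hypothetical and do not represent any particular tool.

```python
# Hypothetical fully descriptive baseline: every file a host should contain.
BASELINE = {"/etc/ntp.conf": "pool ntp.example.org", "/etc/motd": "managed"}

def converge(host, managed_paths):
    """Convergent run: repair only the files the tool manages, leave the rest alone."""
    repaired = dict(host)
    for path in managed_paths:
        repaired[path] = BASELINE[path]
    return repaired

def rebuild():
    """Congruent approach: recreate the host from the baseline."""
    return dict(BASELINE)

# A drifted host: one stale file plus a leftover hotfix nobody tracks.
drifted = {
    "/etc/ntp.conf": "pool old.example.org",
    "/etc/motd": "managed",
    "/tmp/hotfix.sh": "rm -rf cache",
}

converged = converge(drifted, managed_paths=["/etc/ntp.conf"])
congruent = rebuild()

print(converged == BASELINE)  # the untracked hotfix is still on disk
print(congruent == BASELINE)
```

The converged host moves towards the baseline but keeps the untracked file, while the rebuilt host matches the baseline completely.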

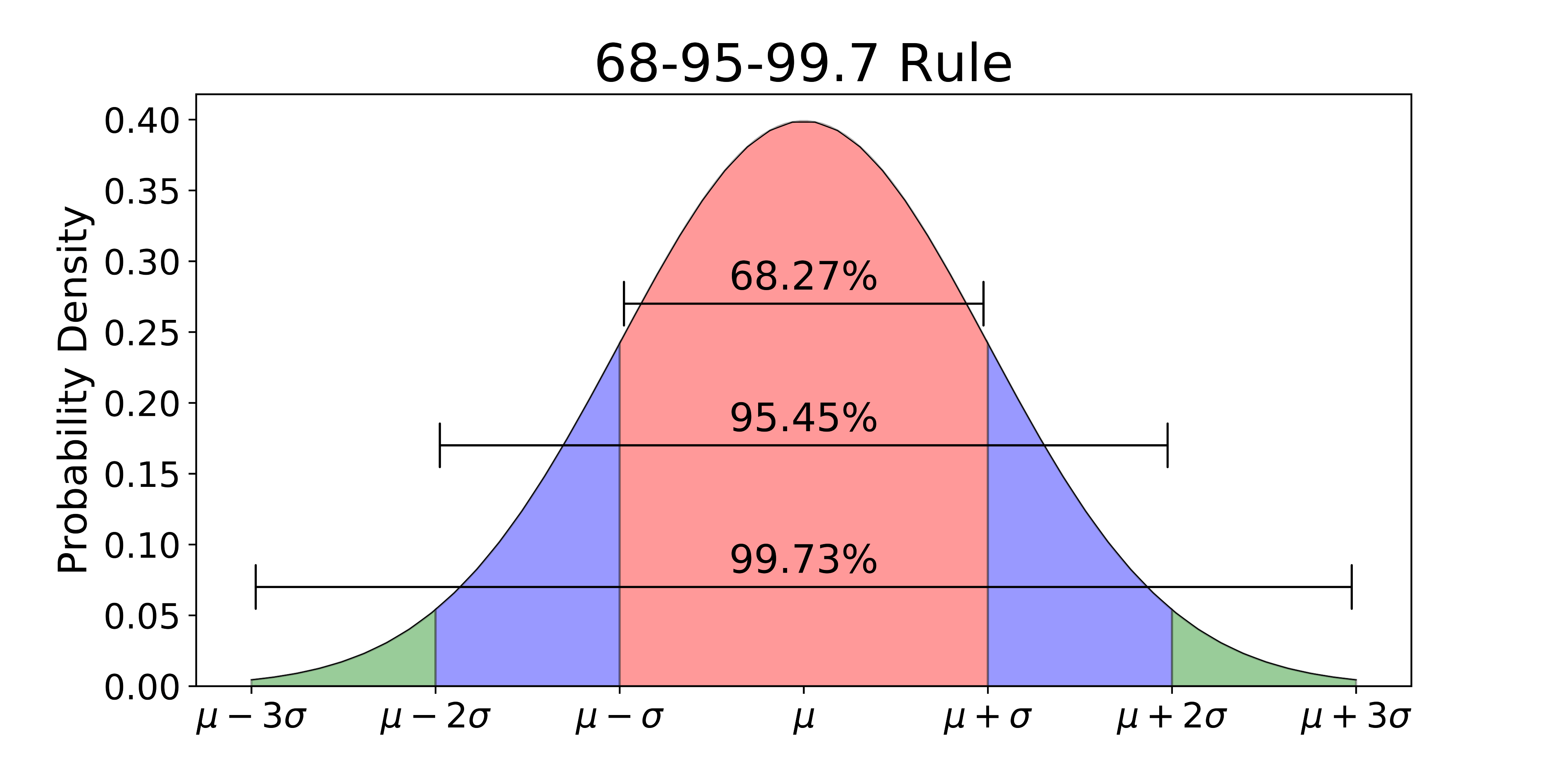

Empirical Rule or 68–95–99.7 Rule

The Empirical Rule is often used in statistics for forecasting, especially when the right data is difficult or impossible to obtain. The rule can give you a rough estimate of what your data collection might look like if you were able to survey the entire population.
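The three percentages in the rule's name can be reproduced in a few lines. The sketch below assumes the data is normally distributed, in which case the share of values within k standard deviations of the mean is erf(k / √2). The function name `coverage_within` is made up for this example.

```python
import math

def coverage_within(k):
    """Share of a normal distribution that lies within k standard deviations of the mean."""
    return math.erf(k / math.sqrt(2))

for k in (1, 2, 3):
    print(f"within {k} sigma: {coverage_within(k):.4f}")
# within 1 sigma: 0.6827
# within 2 sigma: 0.9545
# within 3 sigma: 0.9973
```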

Variation

- Converged Infrastructure

- Immutable Infrastructure

- Immutable Delivery

Immutable Delivery

Immutable Delivery (Pro’s)

- Least variation pattern

- Faster provision model

- Fits well with Microservices architectures

- Less reliance on Infrastructure as Code

- Binary consistency from dev to prod

Immutable Delivery (Con’s)

- DSL abstraction not as mature as Infrastructure as Code

- Small changes are harder to manage

- Debugging is harder

- Need a good model for image management

- Not all delivery models fit well

Third Generation Configuration Management Summary

- Partially Declarative and Partially Descriptive

- Fully Automated

- Disposable Targeted State

- Cattle Not Pets

- Congruent versus Convergent Environments

- Repeatable and Disposable

- Immutable

References

- Configuration Management for Continuous Delivery - Mark Burgess

- Immutable Server

- Snowflake vs Phoenix servers

Bug types 🐛

In his talk John mentioned the term Heisenbug, which led me to the Heisenbug Wikipedia page. That page lists several interesting bug types.

- A Heisenbug is a software bug that seems to disappear or alter its behavior when one attempts to study it.

- A Bohrbug, by opposition, is a “good, solid bug”. Like the deterministic Bohr atom model, they do not change their behavior and are relatively easily detected.

- A Mandelbug (named after Benoît Mandelbrot’s fractal) is a bug whose causes are so complex it defies repair, or makes its behavior appear chaotic or even non-deterministic. The term also refers to a bug that exhibits fractal behavior (that is, self-similarity) by revealing more bugs (the deeper a developer goes into the code to fix it the more bugs they find).

- A Schrödinbug or Schroedinbug (named after Erwin Schrödinger and his thought experiment) is a bug that manifests itself in running software after a programmer notices that the code should never have worked in the first place.

- A Hindenbug (named after the Hindenburg disaster) is a bug with catastrophic behavior.

- A Higgs-bugson (named for the Higgs boson particle) is a bug that is predicted to exist based upon other observed conditions (most commonly, vaguely related log entries and anecdotal user reports) but is difficult, if not impossible, to artificially reproduce in a development or test environment. The term may also refer to a bug that is obvious in the code (mathematically proven), but which cannot be seen in execution (yet difficult or impossible to actually find in existence).

How convenient it is to kill Open Standards

Bernd Erk | @gethash | Icinga

All the technical freedom and diversity we enjoy in our industry is the result of internal, grass root evangelism. Over the last couple of decades, thought leaders have strongly opposed manufacturer-centric strategies and argued the case of Open Source and Open Standards. This ultimately led to the success of Linux and Open Source we have today.

But now, two decades later, the IT industry is in upheaval again: All three major cloud providers have been pushing their serverless solutions in order to lure customers into a new form of vendor lock-in. And they succeeded: The number of serverless deployments has already surpassed those of container based ones.

“So this is how liberty dies … with thunderous applause”

I think there is no time to waste in reminding ourselves about Open Standards, their value to our industry, and why it is worth fighting for their survival. Open Standards go beyond the boundaries of development and operation. They are the foundation of a barrier free interoperability and independent communications. The lecture aims to inspire the connection between both worlds and paradigms for a modern and flexible application infrastructure.

“Monoliths are the future” - Kelsey Hightower

Kelsey’s unpopular opinion is based on the abuse of microservices. Coming from a monolith, it is very easy to create a distributed monolith instead of a true microservice architecture. Oh, and just rearchitecting for Kubernetes is not the right solution either.

Nomad: Kubernetes, without the complexity

Andy Davis | @pondidum | github | andydote.co.uk

This talk is related to his blog post Nomad Good, Kubernetes Bad.

Nomad (HashiCorp)

Nomad is a flexible workload orchestrator that enables an organization to easily deploy and manage any containerized or legacy application using a single, unified workflow. Nomad can run a diverse workload of Docker, non-containerized, microservice, and batch applications.

Nomad enables developers to use declarative infrastructure-as-code for deploying applications. Nomad uses bin packing to efficiently schedule jobs and optimize for resource utilization. Nomad is supported on macOS, Windows, and Linux.
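To give a feel for what bin packing means for a scheduler, here is a minimal sketch using the classic first-fit-decreasing heuristic. That heuristic is chosen for brevity and is an assumption: these notes do not describe how Nomad's scheduler actually places jobs, and the job names, memory figures and node capacity are invented.

```python
def pack_jobs(jobs, node_capacity):
    """Place jobs (name -> required MB) on nodes using first-fit decreasing.

    Returns a list of nodes, each a dict with the remaining 'free' capacity
    and the 'jobs' placed on it.
    """
    nodes = []
    # Largest jobs first: they are the hardest to place.
    for name, need in sorted(jobs.items(), key=lambda item: item[1], reverse=True):
        if need > node_capacity:
            raise ValueError(f"{name} does not fit on any node")
        for node in nodes:
            if node["free"] >= need:
                break  # first node with enough room wins
        else:
            # No existing node has room, so start a new one.
            node = {"free": node_capacity, "jobs": []}
            nodes.append(node)
        node["free"] -= need
        node["jobs"].append(name)
    return nodes

# Hypothetical workload, memory requirements in MB.
jobs = {"web": 512, "cache": 256, "batch": 768, "cron": 128, "api": 384}
placement = pack_jobs(jobs, node_capacity=1024)
for index, node in enumerate(placement):
    print(index, node["jobs"], "free:", node["free"])
```

With these numbers the five jobs fit on two fully used nodes. Spreading them one per node would need five.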

Key features:

- Deploy Containers and Legacy Applications

- Simple & Reliable

- Device Plugins & GPU Support

- Federation for Multi-Region

- Proven Scalability

- HashiCorp Ecosystem

Compared to Kubernetes with its Docker focus, Nomad is more general purpose, with support for both containerized (including Docker) and standalone applications.

Gossip protocol

Nomad uses the gossip protocol, a procedure or process of computer peer-to-peer communication that is based on the way epidemics spread. Some distributed systems use peer-to-peer gossip to ensure that data is disseminated to all members of a group.
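The epidemic-style spread is easy to simulate. The sketch below is a simplified push variant and not Nomad's actual implementation: the `fanout` of two peers per round, the fixed random seed and the cluster size are all assumptions made for the example.

```python
import random

def gossip_rounds(node_count, fanout=2, seed=0):
    """Simulate push-style gossip; return the rounds needed until every node is informed."""
    rng = random.Random(seed)
    informed = {0}  # node 0 starts with the update
    rounds = 0
    while len(informed) < node_count:
        newly_informed = set()
        for node in informed:
            # Each informed node forwards the update to a few randomly chosen peers.
            peers = rng.sample(range(node_count), k=min(fanout, node_count))
            newly_informed.update(peers)
        informed |= newly_informed
        rounds += 1
    return rounds

print(gossip_rounds(100))
```

Because every informed node keeps spreading the update, the informed set grows roughly exponentially at first, which is why gossip reaches a whole cluster in a small number of rounds without any central coordinator.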

Secret management, generated by Vault, orchestrated by Nomad.

HashiCorp tools look like one-trick ponies: very good, relatively simple and clean. Somehow people want to work with more complex unicorn tooling like Kubernetes.

Pulumi is a force to be reckoned with … Very much in the same space as Terraform.

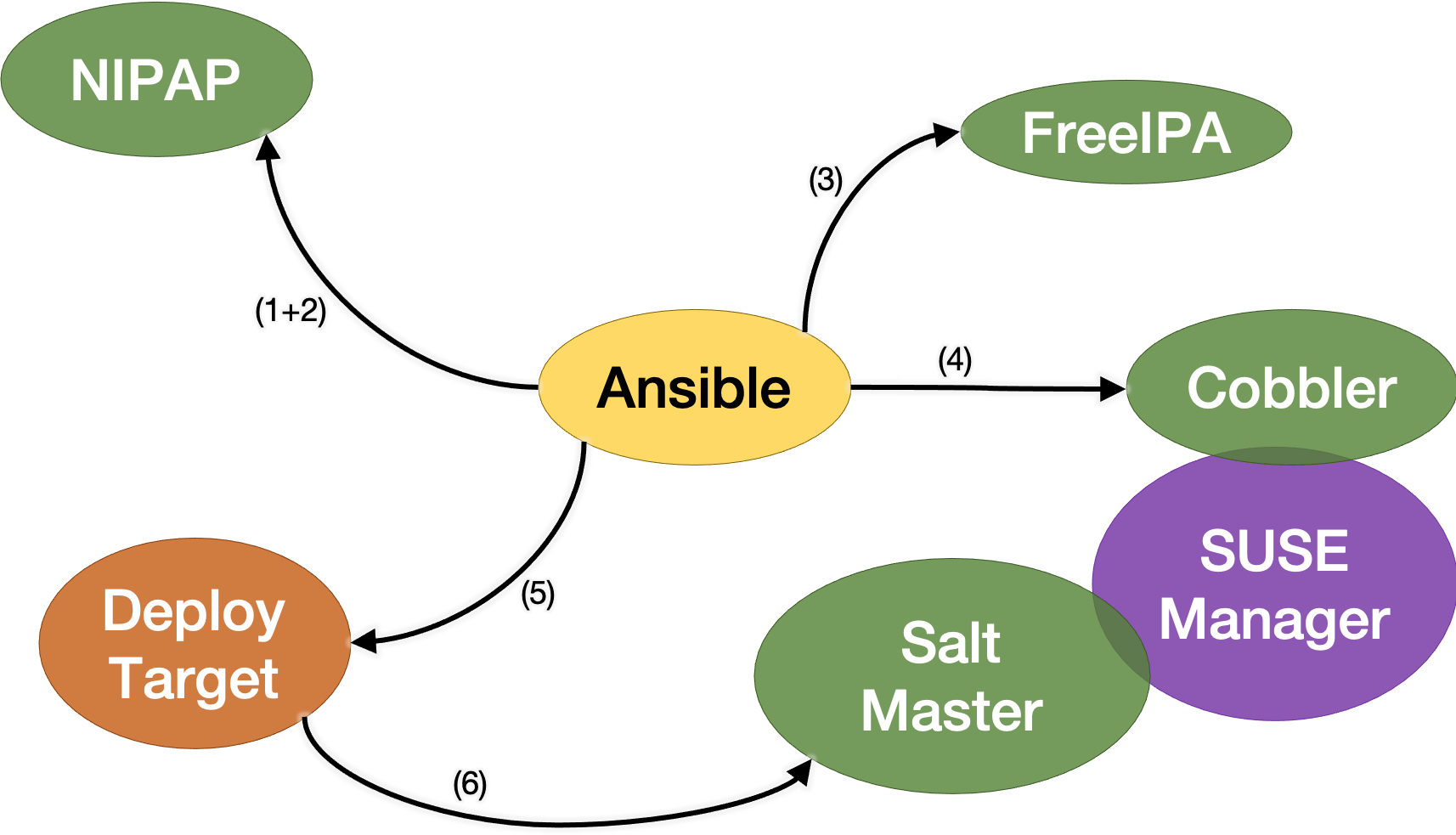

Bare Metal Provisioning with Ansible and Cobbler

An overview of an actual bare metal provisioning scheme powered by Ansible and Cobbler, with support for several Linux flavors and virtual machines.

- generate NIPAP address if needed

- get full CIDR info from NIPAP

- register machine in IPA

- generate boot medium

- machine joins Salt

NIPAP

NIPAP is a sleek, intuitive and powerful IP address management system built to handle large amounts of IP addresses.

Key features:

- Great UX

- CLI

- Powerful search

- Statistics

- PostgreSQL

- IPv6

- Open Source

- Native VRF support

- Adding Prefixes

NIPAP => “Neat IP-Address Planner”

Hierarchical subnet planning with NIPAP
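The idea of hierarchical planning, carving a large prefix into smaller ones and assigning those, can be sketched with Python's standard `ipaddress` module. This does not use NIPAP or its API. The `10.0.0.0/16` prefix and the role names are hypothetical.

```python
import ipaddress

# Hypothetical top-level allocation (an RFC 1918 prefix, not from the talk).
site = ipaddress.ip_network("10.0.0.0/16")

# Split the site into /24 subnets and hand the first few to named roles.
roles = ["management", "storage", "compute"]
plan = dict(zip(roles, site.subnets(new_prefix=24)))

for role, subnet in plan.items():
    first_host = subnet.network_address + 1
    print(f"{role}: {subnet} (first host {first_host}, {subnet.num_addresses} addresses)")
```

Each assigned subnet stays inside the parent prefix, so the plan can be refined further (for example by splitting a /24 into /28s) without ever overlapping a sibling.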

FreeIPA

FreeIPA is an integrated Identity and Authentication solution for Linux/UNIX networked environments. A FreeIPA server provides centralized authentication, authorization and account information by storing data about users, groups, hosts and other objects necessary to manage the security aspects of a network of computers.

FreeIPA is built on top of well known Open Source components and standard protocols with a very strong focus on ease of management and automation of installation and configuration tasks.

FreeIPA combines Linux (Fedora), 389 Directory Server, MIT Kerberos, NTP, DNS, Dogtag (Certificate System). It consists of a web interface and command-line administration tools.

Cobbler

Cobbler is a Linux installation server that allows for rapid setup of network installation environments. It glues together and automates many associated Linux tasks so you do not have to hop between many various commands and applications when deploying new systems, and, in some cases, changing existing ones. Cobbler can help with provisioning, managing DNS and DHCP, package updates, power management, configuration management orchestration, and much more.

govc

govc is a vSphere CLI built on top of govmomi.

The CLI is designed to be a user friendly CLI alternative to the GUI and well suited for automation tasks. It also acts as a test harness for the govmomi APIs and provides working examples of how to use the APIs.

Designing and building a Large Scale CD system

R.I. Pienaar | rip@devco.net | http://devco.net | @ripienaar

This talk will discuss the design and implementation of a bespoke Continuous Delivery system suitable for delivering packaged software to 100s of thousands of nodes in a developer driven git based deployment scenario.

Choria

Choria is an Orchestration System that is easy to install and operate securely at scale. Puppet compatible defaults mean there is very little to configure.

Framework for building management planes that integrate with Puppet.

Probing Ansible Bonds with Molecule tests

Bernhard Hopfenmüller | github/Fobhep | Matthias Dellweg | github/mdellweg | Atix

Molecule

Molecule project is designed to aid in the development and testing of Ansible roles.

Molecule provides support for testing with multiple instances, operating systems and distributions, virtualization providers, test frameworks and testing scenarios.

Molecule encourages an approach that results in consistently developed roles that are well-written, easily understood and maintained.

Molecule supports only the latest two major versions of Ansible (N/N-1), meaning that if the latest version is 2.9.x, we will also test our code with 2.8.x.

Molecule structure:

- templates

- handlers

- files

- vars

- defaults

- tasks

Linting Taste

Edit .yamllint in role dir (added by molecule) to adjust to your taste/specs 😉

    rules:
      document-start: enable
      indentation: {spaces: 2, indent-sequences: consistent}
      truthy: enable
    ignore: |
      .gitlab-ci.yml

Run Molecule Steps

    $ molecule [--scenario-name default] <sequence-step>
    $ molecule lint                    # lint
    $ molecule create                  # only create infra
    $ molecule list                    # list created infra
    $ molecule converge                # run role
    $ molecule login                   # connect with instance to debug
    $ molecule verify                  # only run testinfra/ansible tests
    $ molecule destroy                 # destroy infra
    $ molecule test [--destroy=never]  # run all of the above

    dependency:
      name: gilt

Alternatives to Galaxy:

- gilt, a git layering tool

- Shell, not recommended

Playbooks:

- create: create.yml

- converge: converge.yml

- destroy: destroy.yml

- prepare: prepare.yml

Prepare is for installing test tools, for instance curl for testing a web server.

Different scenarios to test different things

For each new scenario, files are linked with the ln command.

molecule test -s full

Test all in parallel

molecule test --all --parallel

YAML anchors can be used to re-use pieces of code

Also interesting reads:

- Slides from CfgMgmtCamp 2019, on speeding up Ansible

- Slides from AnsibleFest 2019, Practical Ansible Testing with Molecule